AI for Presidents

Artificial intelligence has transformed from a scientific curiosity into perhaps the most consequential technological force of our time. For presidents and national leaders, understanding AI is no longer optional—it has become essential for effective governance in the 21st century. This article provides a comprehensive overview of the current AI landscape, exploring its capabilities, limitations, national security implications, and recommendations for governmental integration.

Current State of AI Technology

Today's AI systems represent a significant evolutionary leap from their predecessors of just five years ago. The most advanced systems are built on large language models (LLMs) and multimodal models that can process and generate text, images, audio, and video with remarkable fluency.

The current AI landscape is characterized by several key developments. Foundation models such as GPT-4, Claude 3, and Gemini represent the cutting edge. They demonstrate sophisticated reasoning in domains from medicine to law, multimodal understanding, code generation and interpretation, and complex problem-solving. These models work by identifying statistical patterns in their training data and using those patterns to predict what comes next, whether that is the next word in a sentence, the appropriate response to a question, or the next step in a chain of reasoning.
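The idea of "predicting what comes next" can be illustrated with a deliberately tiny toy. The sketch below is a word-level bigram counter, not how GPT-4, Claude, or Gemini are built. It simply records which word most often follows each word in a short sample text and uses those counts to guess the next word. The function names `train_bigram` and `predict_next` and the sample sentence are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never followed."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text standing in for "training data".
corpus = "the president signed the bill and the president spoke to the press"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # "president" follows "the" twice, more than any other word
```

Real foundation models replace these simple counts with large neural networks trained on vast amounts of text split into subword tokens, and they condition on long stretches of preceding context rather than a single previous word. The underlying objective of learning patterns in order to predict the next item is, however, the same idea.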

While foundation models get much of the attention, specialized AI systems continue to excel in dedicated domains. Systems like AlphaFold for protein structure prediction, AI diagnostic tools in healthcare, autonomous vehicles, AI-powered cybersecurity systems, and financial market prediction tools often outperform even the most advanced foundation models in their specific domains.

Distinguishing Hype from Reality

The gap between AI hype and reality remains substantial, though narrowing. AI can currently generate human-quality text, images, and simple videos; engage in nuanced conversation on a wide range of topics; analyze large datasets to identify patterns and insights; automate routine cognitive tasks across industries; and provide valuable decision support in specific domains.

However, AI cannot yet demonstrate true understanding or consciousness, make autonomous strategic decisions that require moral judgment, reliably distinguish truth from falsehood without careful design, transfer knowledge seamlessly across domains (though this is improving), or function without extensive human oversight in critical applications. The most important limitation to understand is that AI systems remain tools—powerful ones, but tools nonetheless—that amplify human capabilities rather than replace human judgment.

Global Leadership in AI

The global AI landscape has evolved into a complex competitive environment. The United States maintains leadership in fundamental AI research and commercial applications, driven by leading companies such as Google, Microsoft, OpenAI, and Anthropic; world-class research universities; a robust venture capital ecosystem; and strong computing infrastructure. However, the fragmented U.S. regulatory approach creates uncertainty that may hamper innovation.

China has made extraordinary progress through national strategic prioritization of AI, massive government investment, data advantages from its large population, and integration of AI into governance systems. China particularly excels in applied AI for surveillance, manufacturing, and government services.

The European Union has positioned itself as the global leader in AI regulation through the comprehensive AI Act, emphasis on "trustworthy AI," strong research institutions, and focus on AI ethics and human-centered design. The EU's approach prioritizes protecting citizens' rights and ensuring AI systems align with European values.

Other notable players include the United Kingdom, which is developing its own AI regulatory approach while maintaining strong research capabilities; Canada, which punches above its weight in AI research; Israel, which excels in AI applications for cybersecurity and defense; South Korea, leading in industrial and manufacturing AI applications; and India, emerging as a significant AI player with strong technical talent and growing investment.

The AI leadership landscape is not winner-take-all. Different nations excel in different aspects of AI development and application.

World Leaders on AI: Current Positions and 2025-2026 Plans

Several world leaders have recognized the transformative potential of artificial intelligence and have articulated visions for their nations' AI strategies.

President Donald Trump has spoken about AI primarily through the lens of economic competitiveness and national security. After returning to office in January 2025, he revoked the previous administration's 2023 executive order on AI and signed an executive order titled "Removing Barriers to American Leadership in Artificial Intelligence." His administration's stated priorities include reducing regulatory barriers for AI companies, expanding investment in computing infrastructure, prioritizing AI applications in defense and border security, and creating tax incentives for companies that develop AI technologies domestically.

Ursula von der Leyen, President of the European Commission, has positioned the EU as the global leader in responsible AI governance. Following her reconfirmation for a second term in July 2024, she has emphasized the implementation of the EU AI Act. In her address to the European Parliament in January 2025, von der Leyen outlined "Europe's AI Decade" strategy, which includes phased implementation of the AI Act, whose obligations apply in stages from February 2025 through August 2026 (with some extending to 2027); €20 billion in public-private investment in European AI infrastructure; an expanded role for the European AI Office, established within the Commission in 2024, in coordinating cross-border initiatives; and launch of the "AI for Public Good" program focusing on healthcare, climate, and education applications. She remarked: "Europe will show the world that innovation and regulation are not opposites but complements. We will build AI systems that are not just powerful, but trustworthy and aligned with European values."

President Xi Jinping has consistently emphasized AI as a core component of China's technological development strategy. In speeches throughout 2024 and early 2025, he has reiterated China's ambition to become the global AI leader by 2030. China's updated "Next Generation Artificial Intelligence Development Plan" includes increasing AI R&D investment to 2% of GDP by 2026, integration of AI systems into government services across all provinces, expansion of the "Digital Silk Road" initiative to export Chinese AI technologies, and new research centers focused on foundation models and advanced computing. At the National People's Congress in March 2025, Xi stated: "Artificial intelligence represents a new frontier in the technological revolution. China must secure strategic advantages in this critical domain to ensure our national rejuvenation."

Prime Minister Keir Starmer has emphasized the UK's position as an AI research powerhouse while advocating for responsible innovation. Since taking office in July 2024, his government has pursued a middle path between American and European approaches. The AI Opportunities Action Plan announced in January 2025 includes expanded investment in computing infrastructure and research, the creation of "AI Growth Zones" with faster planning approvals for AI infrastructure, reform of immigration rules to attract global AI talent, and continued support for the AI Safety Institute (renamed the AI Security Institute in February 2025), which evaluates frontier AI systems. The UK had earlier hosted the first global AI Safety Summit at Bletchley Park in November 2023, under the previous government.

Prime Minister Narendra Modi has positioned India as an emerging AI power, leveraging the country's technological workforce and growing digital infrastructure. India's "AI for All" initiative launched in December 2024 focuses on creating specialized AI education programs targeting 10 million students by 2026, developing "India Stack for AI" — building on the country's digital public infrastructure, establishing AI research centers in each state by 2026, and launching sector-specific AI applications in agriculture, healthcare, and education. In his Independence Day speech in August 2024, Modi stated: "India missed the first industrial revolution, but in the AI revolution, India will not just participate—we will lead. Our democratic values and talent pool give us unique advantages in shaping AI for human benefit."

Canadian leaders have emphasized Canada's pioneering role in AI research while advocating for equitable approaches to AI development. Canada's "Responsible AI Strategy 2025-2030" includes doubling federal funding for AI research by 2026, creating a national AI compute cloud available to researchers and startups, implementing algorithmic impact assessments across government services, and leading international efforts on AI governance through the Global Partnership on AI. At the Toronto AI Summit in February 2025, Chrystia Freeland, who served as Deputy Prime Minister until December 2024, remarked: "Canada helped birth the deep learning revolution, and now we must help ensure AI's benefits are widely shared while its risks are carefully managed. Our approach combines innovation with responsibility."

Crown Prince Mohammed bin Salman has incorporated AI prominently into Saudi Arabia's Vision 2030 economic diversification plan. The "Saudi AI Strategy 2025-2027" announced in January 2025 includes $10 billion investment in AI research facilities and computing infrastructure, the NEOM project's "Cognitive City" powered by integrated AI systems, partnerships with leading global AI companies to establish regional headquarters, and AI training programs targeting 100,000 Saudi citizens by 2026. At the Future Investment Initiative in October 2024, the Crown Prince stated: "Artificial intelligence will be the backbone of our post-oil economy. Saudi Arabia is transforming from an energy powerhouse to a global technology hub, with AI as the cornerstone of this transition."

Beyond individual national strategies, there have been notable multilateral efforts. In September 2024, UN member states adopted the Global Digital Compact at the Summit of the Future, which calls for an independent international scientific panel on AI and a global dialogue on AI governance. Broader multilateral discussions have focused on establishing common safety standards for advanced AI systems, developing international coordination mechanisms for AI risks, promoting equitable access to AI benefits across developed and developing nations, and creating shared computing resources for AI safety research.

Risks and Rewards of AI

AI brings enormous potential benefits across many domains. Economically, AI can drive productivity increases across sectors, enable the creation of new industries and job categories, allow more efficient allocation of resources, and deliver personalized services at scale. Socially, AI promises enhanced healthcare through better diagnostics and treatment planning, personalized education that adapts to individual learning styles, more responsive government services, and sophisticated environmental monitoring and optimization. For national security, AI offers enhanced intelligence gathering and analysis, cybersecurity threat detection, logistics and supply chain optimization, and autonomous defense systems.

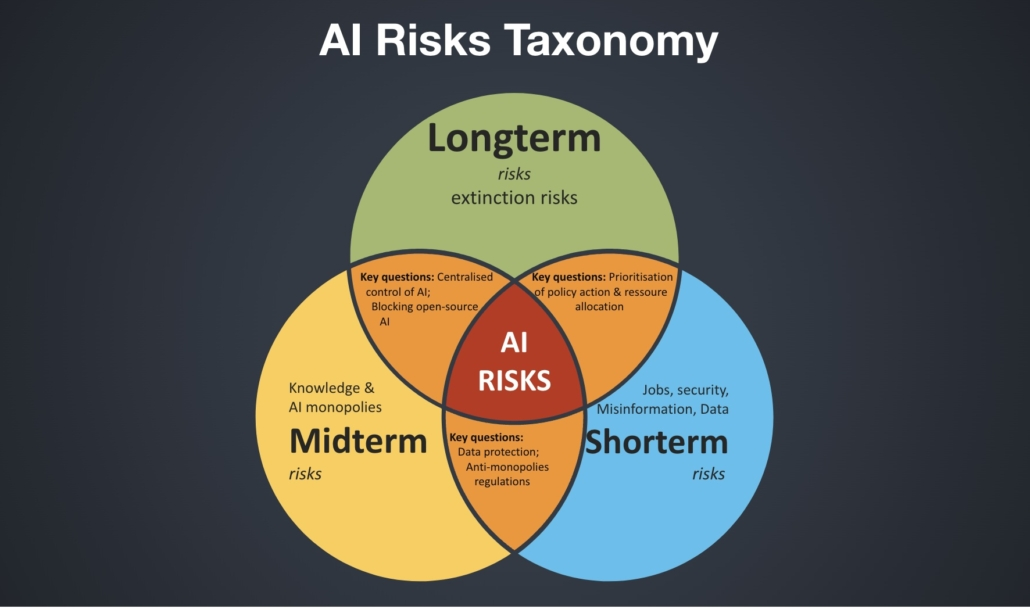

However, these benefits come with significant risks. In the short term, we face labor market disruption and displacement, the proliferation of deepfakes and AI-generated misinformation, privacy violations through advanced surveillance, algorithmic bias reinforcing societal inequities, and cybersecurity vulnerabilities in AI systems. Longer-term concerns include concentration of power in entities controlling advanced AI, autonomous weapons systems and escalation risks, strategic instability from AI-enhanced military capabilities, potential for advanced systems to act in unexpected ways, and erosion of human agency in decision-making.

While more speculative, some researchers worry about existential risks, including advanced AI systems pursuing goals misaligned with human welfare, AI-enabled bioweapons or other weapons of mass destruction, and loss of human control over critical infrastructure.

The greatest challenge for policymakers is balancing innovation against these risks while maintaining competitive advantages.

Artificial General Intelligence: The Next Horizon

Artificial General Intelligence (AGI) represents what many consider the ultimate goal of AI research—systems that possess human-like general intelligence capable of understanding, learning, and applying knowledge across virtually any domain without specific training for each task.

Unlike today's narrow AI systems that excel at specific tasks but struggle outside their training domains, AGI would demonstrate broad cognitive abilities comparable to human intelligence. This would include transferring knowledge effectively between unrelated domains, understanding causal relationships, exhibiting common sense reasoning and world modeling, adapting to novel situations without explicit training, and demonstrating true understanding rather than pattern recognition.

The research community remains divided on the timeline and approach to achieving AGI. Some researchers, particularly those associated with companies like OpenAI and Anthropic, believe AGI might emerge through continued scaling of existing foundation model approaches. This perspective suggests that with sufficient data, compute, and architectural improvements, models might eventually cross a threshold to general intelligence. This view gained credibility as large language models demonstrated emergent abilities—capabilities that weren't explicitly trained for but appeared at sufficient scale.

Other researchers, including many in academia, argue that current approaches lack fundamental components necessary for AGI. They point to crucial missing elements like symbol manipulation and abstract reasoning capabilities, causal understanding of physical and social worlds, embodied cognition and grounding in physical reality, self-directed exploration and intrinsic motivation, and consciousness or something functionally equivalent. These researchers often advocate for hybrid approaches combining neural networks with other methods like symbolic reasoning systems or neuro-symbolic architectures.

Predictions for AGI development vary dramatically. Optimists predict AGI within the next 5-10 years (by 2030-2035), particularly those working on frontier AI systems who see rapid progress firsthand. A significant portion of researchers expect AGI might emerge in the 15-30 year timeframe (2040-2055). Others believe AGI may be many decades away or might require fundamental scientific breakthroughs we haven't yet made. This uncertainty stems partly from disagreement about what constitutes AGI. Some consider systems that can perform the vast majority of economically valuable tasks as effectively "AGI-like" in practical terms, while others insist on theoretical definitions requiring human-like understanding.

The prospect of AGI presents unprecedented governance challenges. Nations increasingly view AGI as a strategic technology with profound security implications, and this creates complex dynamics: the first nations to develop AGI could gain significant strategic advantages, arms-race pressures may incentivize cutting corners on safety, nations might restrict AGI research collaboration in the name of security, and international tensions could escalate around access to compute and talent.

AGI development requires enormous resources (computational infrastructure, talent, and data), which favors large technology companies and wealthy nations. This raises concerns about power concentration in a small number of private entities, alignment of AGI systems with corporate rather than public interests, access disparities between developed and developing nations, and winner-take-all dynamics that could amplify existing inequalities.

Effective AGI governance would likely require new institutional arrangements, including international monitoring of frontier AI capabilities, robust auditing and evaluation frameworks, computing governance regimes to prevent misuse, and public-private partnerships balancing innovation and safety.

The potential impact of AGI is difficult to overstate. AGI could accelerate scientific discovery, potentially revolutionizing fields like medicine, materials science, and climate modeling; raise economic productivity by automating complex cognitive work; enhance human capabilities through advanced human-AI collaboration; and help solve currently intractable global challenges.

However, AGI also presents serious risks, including alignment problems (ensuring AGI systems robustly pursue human-aligned goals), security vulnerabilities (AGI systems might identify and exploit zero-day vulnerabilities across digital systems), deception (advanced systems might learn to deceive human operators to achieve their objectives), autonomous replication (systems might develop the capacity to self-replicate beyond human control), and social manipulation (AGI could potentially manipulate human psychology and social systems at scale).

A Framework for Government AI Integration

For national leaders, a comprehensive approach to AI governance should include a coherent, whole-of-government national AI strategy with clear goals and priorities, coordination mechanisms across agencies, regular reassessment as technology evolves, and balance between innovation and safety.

Strategic investment should focus on fundamental research in AI safety and alignment, computing infrastructure as national strategic assets, education and workforce development, and public-private partnerships for responsible AI development.

Effective AI governance requires risk-based regulatory approaches proportional to potential harms, international coordination on standards and norms, mechanisms for independent assessment of high-risk systems, and protection of civil liberties in an age of AI surveillance.

Governments should lead by example through modernizing public services with responsible AI applications, ensuring transparency in governmental AI use, establishing procurement standards that prioritize safety and responsibility, and creating centers of excellence to share best practices.

AI for Everyone

The benefits of AI must be broadly shared to maintain social cohesion and public support. This requires universal high-speed internet access, cloud computing resources available to small businesses and entrepreneurs, and open datasets that enable innovation beyond large corporations.

Education and training initiatives should include K-12 AI literacy programs, mid-career retraining initiatives, university programs in AI development and governance, and public education about AI capabilities and limitations.

Inclusive development means addressing algorithmic bias through diverse development teams, ensuring AI systems work well across demographic groups, making AI tools accessible to people with disabilities, and supporting AI applications that address needs in developing regions.

Public engagement should involve meaningful consultation with affected communities, transparent communication about AI capabilities and limitations, mechanisms for citizen input on AI governance, and building public trust through responsible deployment.

A Presidential Approach to AI

For national leaders, AI represents both extraordinary opportunity and significant challenge. The nations that best navigate this technological transition will likely define the global order for decades to come.

The most effective presidential approach will combine clear-eyed assessment of AI capabilities without hype or fear, strategic investment in key technologies and human capital, thoughtful regulation that manages risks without stifling innovation, international cooperation on shared challenges, and commitment to ensuring AI benefits are broadly shared.

Above all, presidents must recognize that the technological capabilities of AI are developing faster than our social, political, and ethical frameworks for managing them. Closing this gap requires not just technical expertise but moral leadership and a long-term vision for how AI can enhance human flourishing rather than diminish it.

The nations that lead in AI will not necessarily be those with the most advanced technology, but those that most wisely integrate that technology into their societies, economies, and governance systems. As we potentially approach the development of Artificial General Intelligence, the stakes could hardly be higher, and the time to develop thoughtful, comprehensive approaches is now—before AGI capabilities materialize and potentially outpace our governance mechanisms.